Imagine you get a phone call. The number is unknown, but you answer. You hear the voice of your spouse, your child, or your parent. They are panicked, perhaps crying. They say they’ve been in an accident, they’re in jail, or they’re in some kind of terrible trouble. They need money, right now, and they’re begging you to send it before something worse happens. Your heart hammers in your chest. You know that voice. You would recognize it anywhere.

But it’s not them.

You have just become the victim of a new, terrifyingly effective form of fraud, powered by a technology that, by late 2025, has moved from science fiction to a readily available tool. This is the world of AI voice cloning.

The ability of artificial intelligence to replicate a human voice is no longer a clumsy, robotic gimmick, and it is no longer the preserve of multi-million-dollar studios. Today, the technology has become so advanced, so accessible, and so frighteningly accurate that it can be considered “perfect” for its intended use, whether that use is for good or for evil.

This revolution in voice synthesis, or “generative audio,” has created a perfect storm. It brings with it a wave of incredible opportunities for creativity, accessibility, and art, while simultaneously unleashing a Pandora’s Box of deepfake scams, sophisticated identity theft, and unprecedented tools for misinformation.

This article explores the new reality of AI voice cloning. We will dive into the technology that makes it “perfect,” explore the utopian applications that could change lives for the better, confront the dystopian nightmare of its weaponization, and finally, build a critical security playbook for how to protect yourself in a world where you can no longer believe what you hear.

How AI Achieves the “Perfect” Voice Copy

When we say a cloned voice is “perfect,” we are no longer just talking about the sound. For years, text-to-speech (TTS) was robotic and flat. The breakthrough of modern AI is its ability to capture not just the timbre of a voice but also its prosody: the rhythm, the intonation, the pauses, the unique emotional color, and the subtle imperfections that make a human voice sound human.

It used to be that creating a high-quality “digital double” required a person to sit in a recording studio for dozens of hours, reading specific scripts to capture every possible sound and inflection. This was expensive and time-consuming, limiting its use to high-end applications like blockbuster films or GPS navigation systems.

That era is over. Today’s AI models operate on a new, far more powerful and data-efficient paradigm:

- Few-Shot and Zero-Shot Cloning: Many modern models need only a short sample of a voice to create a usable clone. That can be just a few seconds from an Instagram story, a TikTok video, or a voicemail. In “zero-shot” cloning, the model has never been trained on that particular speaker at all. It conditions on the reference clip at generation time, with no extra training step.

- Voice Design from Text: Some models don’t even need a sample. You can describe a voice in text (“a deep, gravelly voice with a British accent, speaking in a hurried, anxious tone”) and the AI will generate a new, synthetic voice to match.

This low barrier to entry is what makes the technology so disruptive. Every time you speak in a public video, record a podcast, or even leave a company voicemail, you are creating the raw material that could be used to clone your voice.

The Science: From Waveforms to Deep Learning

The journey from a robotic “computer voice” to a perfect human copy is a story of a fundamental shift in AI.

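A. The Old Way (Concatenative & Parametric TTS): The old methods were crude. Concatenative synthesis involved recording thousands of tiny sound snippets from a voice actor and then “stitching” them together to form new words. These snippets were individual speech sounds and the transitions between them, such as diphones. This is why old GPS voices often sounded choppy: you were hearing a database of audio clips being reassembled, with audible seams at the joins. Parametric synthesis took a different approach. It generated speech from a statistical model of the voice’s acoustic features, which made it smoother but tended to leave it sounding buzzy and muffled.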

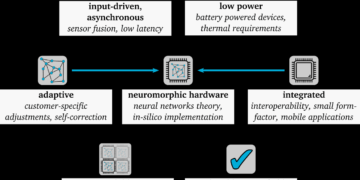
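To make the “stitching” idea concrete, here is a minimal Python sketch of concatenative synthesis. It uses a hypothetical unit database in which plain sine tones stand in for recorded speech snippets. Each unit is looked up by label and spliced onto the output with a short linear crossfade. The unit labels, sample rate and crossfade length are all illustrative assumptions. Real systems choose among many candidate recordings of each unit using cost functions, which this toy skips.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed)


def recorded_unit(freq_hz: float, n_samples: int = 800) -> list[float]:
    """Stand-in for a recorded speech snippet: a plain sine tone (hypothetical)."""
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n_samples)]


# Hypothetical unit database: each diphone label maps to one stored snippet.
UNIT_DB = {
    "h-e": recorded_unit(220),
    "e-l": recorded_unit(330),
    "l-o": recorded_unit(440),
}


def concatenate(labels: list[str], crossfade: int = 80) -> list[float]:
    """Look up each unit and splice it onto the output with a linear crossfade."""
    out: list[float] = []
    for label in labels:
        unit = UNIT_DB[label]
        if not out:
            out = list(unit)
            continue
        k = min(crossfade, len(out), len(unit))
        start = len(out) - k
        # Blend the tail of the output so far with the head of the next unit.
        for i in range(k):
            w = (i + 1) / (k + 1)
            out[start + i] = (1 - w) * out[start + i] + w * unit[i]
        out.extend(unit[k:])
    return out


audio = concatenate(["h-e", "e-l", "l-o"])
print(len(audio))  # 2240: three 800-sample units minus two 80-sample overlaps
```

The crossfade only hides each seam. It cannot make two snippets recorded in different contexts flow naturally into each other, and that mismatch is what listeners heard as choppiness.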

B. The New Way (Generative AI): Modern voice cloning uses neural networks that have been trained on a massive dataset of human speech, often called a “foundation model.” These models don’t just stitch old sounds; they generate new, unique audio waveforms from the ground up.

- Generative Adversarial Networks (GANs): This is a clever process in which two neural networks are pitted against each other. One, the “Generator,” creates a fake audio clip. The other, the “Discriminator,” acts as a critic and tries to determine whether the clip is real human speech or a fake. The Generator’s only goal is to fool the Discriminator. Repeating this adversarial cycle over many training steps pushes the Generator to produce audio the Discriminator can no longer reliably tell apart from the real thing. In today’s speech systems, GANs are widely used in the “vocoder,” the final stage that turns an intermediate representation of speech into the actual waveform.

- Transformer Models: The same AI architecture that powers text-based models like ChatGPT is now being applied to audio. Many such systems first use a neural audio codec to convert audio into sequences of discrete “tokens.” They then predict those tokens much as a language model predicts words. These models are exceptionally good at understanding context. When cloning a voice, a Transformer model handles not just the word “No” but the context in which it’s said. Is it a short, angry “No!”? A long, sad “No…”? Or a questioning “No?” The AI can generate all of these variations convincingly.

- Diffusion Models: These cutting-edge models, which have taken over AI image generation, are now being used for audio. They work by taking pure noise (static) and slowly refining it, step by step, into a coherent, clean audio waveform that matches the target voice and emotion.
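The “pure noise to clean waveform” process can be sketched as a toy version of DDPM-style ancestral sampling. This is one common diffusion formulation, not necessarily the one any particular voice product uses. The trained neural network is replaced by a hypothetical oracle that already knows the clean target, so the sketch shows only the step-by-step refinement mechanics. The number of steps, the linear noise schedule and the sine-wave “target” are assumptions chosen for illustration.

```python
import math
import random

T = 50  # number of denoising steps (assumed)
# Linear noise schedule from 1e-3 to 0.2 (assumed values).
BETAS = [1e-3 + (0.2 - 1e-3) * t / (T - 1) for t in range(T)]
ALPHAS = [1.0 - b for b in BETAS]
ALPHA_BARS: list[float] = []
_running = 1.0
for _a in ALPHAS:
    _running *= _a
    ALPHA_BARS.append(_running)  # cumulative product: how much signal survives to step t

# Hypothetical "clean" target waveform: 64 samples of a sine wave.
TARGET = [math.sin(2 * math.pi * i / 16) for i in range(64)]


def oracle_denoiser(x_t: list[float], t: int) -> list[float]:
    """Hypothetical stand-in for a trained network. It cheats by returning the
    clean target; a real model must estimate it from x_t, the step t, and
    conditioning such as text and a speaker embedding."""
    return TARGET


def rms_error(x: list[float], ref: list[float]) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, ref)) / len(ref))


def sample(seed: int = 0) -> tuple[list[float], list[float]]:
    """Run the reverse (denoising) process and record the error after each step."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in TARGET]  # start from pure noise ("static")
    errors = [rms_error(x, TARGET)]
    for t in reversed(range(T)):
        x0_hat = oracle_denoiser(x, t)
        ab_t = ALPHA_BARS[t]
        ab_prev = ALPHA_BARS[t - 1] if t > 0 else 1.0
        # Mean and standard deviation of the DDPM posterior q(x_{t-1} | x_t, x0_hat).
        c0 = math.sqrt(ab_prev) * BETAS[t] / (1.0 - ab_t)
        ct = math.sqrt(ALPHAS[t]) * (1.0 - ab_prev) / (1.0 - ab_t)
        std = math.sqrt(BETAS[t] * (1.0 - ab_prev) / (1.0 - ab_t))
        x = [c0 * p + ct * q + std * rng.gauss(0.0, 1.0) for p, q in zip(x0_hat, x)]
        errors.append(rms_error(x, TARGET))
    return x, errors


final, errors = sample()
print(f"start error {errors[0]:.3f}, final error {errors[-1]:.2e}")
```

Because the stand-in denoiser already knows the answer, the error falls to essentially zero. With a real network, the quality of the final audio depends entirely on how well it has learned to predict clean speech from noisy input.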

This combination of technologies is what allows a user to type a sentence, select a cloned voice, and choose an emotion—and hear the AI speak those words with a level of realism that can, and does, fool human ears.

The Utopian Dream: Voice Cloning for Good

The potential for this technology to do profound good is staggering. In a perfect world, AI voice cloning would be celebrated as a tool for creation, connection, and unprecedented accessibility.

A. Accessibility and Medical Miracles: This is, without a doubt, the technology’s most important application.

- Restoring Lost Voices: For patients with conditions like ALS (a form of motor neuron disease) or throat cancer, the loss of their voice is a devastating part of their illness. Previously, their main option was a generic, robotic text-to-speech device (like the one famously used by Stephen Hawking).

- Voice Banking: Now, a patient who receives a diagnosis can “bank” their voice. By recording just a few minutes of speech, they give an AI model enough material to learn their unique vocal identity. As their illness progresses, they can continue to speak to their loved ones in their own voice through their computer or phone. This provides an immeasurable amount of dignity and humanity.

B. Entertainment and Media Creation: The creative industries are being completely reshaped by this technology, offering new levels of immersion and efficiency.

- Perfect Dubbing: For the first time, audiences can watch foreign films dubbed in the original actor’s voice. An AI could take Robert Downey Jr.’s voice, clone it, and have it speak fluent Mandarin, Spanish, or French while matching his tone and emotion.

- Dynamic NPCs in Gaming: Video game worlds are about to feel infinitely more alive. Instead of hearing the same 10 recycled lines of dialogue, an AI can generate new, unique dialogue for non-player characters (NPCs) on the fly, all in the voice of the original, high-profile voice actor.

- Efficient Content Creation: Podcasters and YouTubers can edit their audio simply by editing a text document. If they misspeak, they don’t need to re-record; they just delete the wrong word and type in the correct one, and the AI generates the audio in their voice.
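The “edit the text, not the audio” workflow can be sketched as a diff between the old and new transcripts. Only the changed words need new audio, which a cloned-voice model would then synthesize and splice into the recording. This sketch uses Python’s standard `difflib`. The function name `plan_audio_edits` is hypothetical, and the synthesis and splicing steps are left out.

```python
import difflib


def plan_audio_edits(old_transcript: str, new_transcript: str) -> list[tuple[str, str, str]]:
    """Compare two transcripts word by word and list the spans whose audio
    would need to be regenerated (hypothetical helper)."""
    old_words = old_transcript.split()
    new_words = new_transcript.split()
    matcher = difflib.SequenceMatcher(a=old_words, b=new_words, autojunk=False)
    edits = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue  # unchanged words keep their original recorded audio
        edits.append((tag, " ".join(old_words[i1:i2]), " ".join(new_words[j1:j2])))
    return edits


print(plan_audio_edits("we launched the app in march", "we launched the app in april"))
# [('replace', 'march', 'april')]
```

Working at the word level keeps the regenerated span as short as possible. That matters because every synthesized splice is a place where the edit could become audible.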

C. Personalization and Digital Legacy: The technology also offers deep, personal connections.

- Digital Assistants: Why listen to the generic “Alexa” or “Siri” when your digital assistant could speak in the voice of your spouse, your parent, or a celebrity you admire?

- Digital Immortality: This is a more profound, and perhaps unsettling, application. People can create a “voice clone” of an aging relative. After they pass away, the AI can be used to narrate their old letters, journals, or even read bedtime stories to their grandchildren, creating a “digital legacy” that allows their voice to live on.

The Dystopian Nightmare: The Deepfake Threat

For every positive application, there is a dark, mirror-image negative. The same tools that restore dignity to a patient can be weaponized to strip a person of their security, their reputation, and their savings. This is the domain of the audio deepfake.

A. The “Vishing” Scammer and Emotional Fraud: This is the most immediate and visceral threat to the average person. “Vishing” (Voice Phishing) is supercharged by AI.

- The Family Emergency Scam: As described in our introduction, a scammer can scrape a 30-second audio clip of your child from their public Instagram profile. They call you using a convincing clone of your child’s voice, faking a kidnapping or an arrest. In that moment of pure terror, your critical thinking evaporates, and many people send the money. This scam is devastatingly effective. It feeds the broader category of impostor scams, which already costs victims billions of dollars a year.

- Impersonating Authority: A scammer can clone the voice of a bank representative, a police officer, or a government tax agent, using the cloned voice to add a powerful layer of legitimacy to their demands.

B. Corporate and Financial Fraud (CEO Deepfakes): The business world is the new front line. The “CEO Fraud” scam has moved from fake emails to fake voices.

- The Urgent Wire Transfer: A high-level finance manager receives an “urgent” call on their cell phone. The caller ID is spoofed, and the voice is a convincing clone of the company’s CEO. The “CEO” says they are in a secret, time-sensitive M&A deal and need an immediate, “off-the-books” wire transfer of $5 million. The manager, hearing the authority and familiar stress in their boss’s voice, complies. This is not hypothetical. Similar schemes using cloned executive voices have already been reported, and in at least one case the losses ran into the tens of millions of dollars.

C. Weaponized Misinformation and Political Chaos: On a macro level, AI voice cloning is a direct threat to social stability and democracy.

- The Pre-Election Deepfake: Imagine a “leaked” audio recording released 48 hours before a major election. It’s a perfect clone of a presidential candidate “confessing” to a crime, accepting a bribe, or using hateful language. The clip goes viral. By the time it is forensically debunked as a fake, the election is already over. The damage is done.

- Eroding Trust in Everything: When any audio can be faked, what can we trust? A recording from a journalist, a whistleblower, or a court proceeding can be instantly dismissed as a “deepfake.” This creates a “liar’s dividend”: people caught on genuine recordings can simply claim the evidence was faked. Real evidence loses its force because the possibility of fakery exists.

D. Personal and Reputational Ruin: The tools are now available for personal, malicious attacks. A stalker can clone their victim’s voice to harass their family and friends. An ex-partner can create fake audio “confessions” to destroy someone’s new relationship or get them fired from their job.

Your New Security Playbook: How to Protect Yourself

The era of “trusting your ears” is over. We must all adopt a new, more skeptical mindset. This is no longer just a technological problem; it is a human one.

A. The Human Firewall (Psychological Defense): Your brain is your first line of defense.

- Agree on a Family Safe Word: This is one of the most effective low-tech defenses. Establish a secret word or question with your immediate family (spouse, children, parents) and never share it online. If they ever call you in a “panicked” situation asking for money, ask them for the safe word. A scammer won’t know it and will typically stall, get aggressive, or hang up. A real loved one will know it.

- The “Call Back” Rule: If you receive an urgent, high-pressure request (from your boss, your bank, or a family member), hang up. Then call that person back on a trusted number you already have in your contacts. Do not use the number they called from, because caller ID can be spoofed. If the emergency is real, you can usually verify it within minutes.

- Be Skeptical of Urgency: Scammers rely on panic. They create a false sense of urgency to prevent you from thinking critically. Any request that involves “right now,” “secret,” and “money” should be considered a massive red flag.
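The three rules above can be written down as a simple checklist. The sketch below is hypothetical. The phrase lists, the `IncomingRequest` fields and the `contacts` lookup are assumptions for illustration, and plain keyword matching is a crude stand-in for human judgment. The key design choice is that the callback number always comes from your own contacts, never from the incoming call.

```python
from dataclasses import dataclass

# Illustrative pressure phrases (assumed; a real list would be longer).
URGENCY = ("right now", "immediately", "urgent")
SECRECY = ("secret", "don't tell", "off the books")
MONEY = ("money", "wire", "transfer", "gift card", "bail", "crypto")


@dataclass
class IncomingRequest:
    caller_number: str     # number shown on caller ID (can be spoofed)
    claimed_identity: str  # who the caller says they are
    transcript: str        # what they are asking for
    gave_safe_word: bool = False


def red_flags(req: IncomingRequest) -> list[str]:
    """Crude keyword check for the urgency / secrecy / money pattern."""
    text = req.transcript.lower()
    flags = []
    for name, phrases in (("urgency", URGENCY), ("secrecy", SECRECY), ("money", MONEY)):
        if any(p in text for p in phrases):
            flags.append(name)
    return flags


def next_steps(req: IncomingRequest, contacts: dict[str, str]) -> list[str]:
    """Return the verification steps to take before acting on the request."""
    flags = red_flags(req)
    if not flags:
        return []
    steps = []
    if "money" in flags and not req.gave_safe_word:
        steps.append("ask for the family safe word")
    # Deliberately ignore req.caller_number: caller ID can be spoofed.
    trusted = contacts.get(req.claimed_identity)
    if trusted is None:
        steps.append("hang up and find a number you already trust")
    else:
        steps.append(f"hang up and call back {trusted}")
    return steps


contacts = {"Mom": "+1-555-0199"}
call = IncomingRequest("+1-555-0100", "Mom", "I need money right now, don't tell Dad")
print(red_flags(call))
print(next_steps(call, contacts))
```

Here the call matches all three red flags. It produces two steps: ask for the safe word, then call back the saved number rather than the one on the caller ID.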

B. The Technological Defense (Building the “Digital Larynx”): Because the threat is powered by AI, part of the solution will be AI, too.

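- Audio Watermarking and Provenance: The industry is pursuing two complementary approaches. First, AI voice providers can embed an inaudible, persistent “watermark” in the audio their models generate, so detection tools can scan a file and flag it as “synthetic.” Second, content-provenance standards such as C2PA let devices and editing software attach cryptographically signed information to authentic recordings, so their origin can be checked later. Neither approach is foolproof. Watermarks can be degraded by compression or editing, and open-source models need not add them at all.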

- AI-Detection Software: Cybersecurity firms are racing to build AI models trained to “hear” the subtle acoustic artifacts that generative AI leaves behind. The goal is to build such services into phones and communications platforms, providing a real-time “potential deepfake” warning. Detectors will need constant retraining as generators improve.

- Control Your “Vocal Footprint”: Be conscious of how much of your voice you make public. Consider making social media accounts private. While it’s impossible to be silent, reducing the amount of high-quality, publicly available audio of your voice can make you a slightly harder target.
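One classic way to build an audio watermark is spread-spectrum embedding. The embedder adds a very quiet pseudo-random +1/-1 pattern derived from a secret key. A detector with the same key later correlates the audio against that pattern. The sketch below is a toy version of that idea. The key, the strength, the threshold and the test tone are all assumptions. Production watermarks are shaped to stay inaudible and designed to survive compression, resampling and re-recording, none of which this toy attempts.

```python
import math
import random

STRENGTH = 0.01  # watermark amplitude (assumed); real systems shape it to stay inaudible


def key_sequence(key: int, n: int) -> list[float]:
    """Pseudo-random +1/-1 'chips' derived from a secret key."""
    rng = random.Random(key)
    return [1.0 if rng.random() < 0.5 else -1.0 for _ in range(n)]


def embed(audio: list[float], key: int) -> list[float]:
    """Add a quiet, key-dependent pseudo-random pattern to every sample."""
    chips = key_sequence(key, len(audio))
    return [s + STRENGTH * c for s, c in zip(audio, chips)]


def detection_score(audio: list[float], key: int) -> float:
    """Average correlation with the key's pattern: close to STRENGTH when the
    watermark is present, close to 0 otherwise."""
    chips = key_sequence(key, len(audio))
    return sum(s * c for s, c in zip(audio, chips)) / len(audio)


def is_watermarked(audio: list[float], key: int, threshold: float = STRENGTH / 2) -> bool:
    return detection_score(audio, key) > threshold


# Usage: a 5-second, 440 Hz test tone sampled at 16 kHz (assumed demo values).
SAMPLE_RATE = 16_000
clean = [0.3 * math.sin(2 * math.pi * 440 * i / SAMPLE_RATE) for i in range(5 * SAMPLE_RATE)]
marked = embed(clean, key=1234)
print(is_watermarked(marked, key=1234), is_watermarked(clean, key=1234))
```

The key’s pattern is uncorrelated with the underlying audio, so averaging the product drives the audio’s contribution toward zero while the watermark’s contribution adds up. Without the key, the same check finds nothing, which is why the key must stay secret.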

The Voice of the Future: Our Double-Edged Sword

The genie is not going back into the bottle. AI voice cloning technology will only get cheaper, faster, and better. We are at an inflection point. The same tool that will give a voice to the voiceless has also been handed to every scammer, propagandist, and predator on the planet.

This technology is a mirror, reflecting both the best of human ingenuity and the worst of human intentions. It has created a world where our voice—the very signature of our identity—is now a reproducible, editable, and stealable piece of data.

The challenge of the post-2025 era is not to stop this technology; that is impossible. The challenge is to adapt to it. We must build new social, legal, and personal frameworks to manage a reality where we can no longer trust our own ears. We must learn to verify, to question, and to protect our new “digital voice” with the same vigilance we use for our passwords and bank accounts.