Neuromorphic hardware, a revolutionary approach to computing architecture, is reshaping how machines process information by mimicking the human brain. This brain-inspired technology promises breakthroughs in artificial intelligence (AI), edge computing, robotics, and even neuroscience.

As demand increases for faster, more efficient, and more intelligent systems, neuromorphic computing is becoming a central focus in the tech world. In this article, we’ll explore the evolution of neuromorphic hardware, how it works, the key components behind it, its benefits, challenges, and what the future holds.

What Is Neuromorphic Hardware?

Neuromorphic hardware refers to computer architectures designed to replicate the structure and operation of the human nervous system, particularly the brain. These systems use analog or digital circuits to emulate neurons and synapses, the basic building blocks of the brain.

Traditional von Neumann architectures separate memory and processing units and must constantly shuttle data between them, a limitation known as the von Neumann bottleneck. Neuromorphic hardware instead co-locates memory and computation, which can make information processing faster and far more energy-efficient. This architecture is particularly well suited to tasks like pattern recognition, real-time decision-making, and low-power AI inference.

The History and Evolution of Neuromorphic Computing

The term “neuromorphic” was coined in the late 1980s by Carver Mead, a pioneer of VLSI (very-large-scale integration) design. Since then, the field has grown significantly:

A. 1980s – Foundational Concepts

Carver Mead introduces the idea of using silicon-based circuits to mimic neural architectures.

Focus on analog circuits that emulate basic neuron behavior.

B. 1990s – Early Developments

Neuromorphic engineering gains traction in academia.

Research emphasizes sensory processing models (vision, hearing).

C. 2000s – Rise of Digital Neurons

Shift toward digital implementations to improve reliability and scalability.

Development of neuron-synapse networks using standard CMOS technology.

D. 2010s – Industrial Adoption

Tech giants like IBM, Intel, and HP begin investing in neuromorphic hardware.

Projects like IBM’s TrueNorth and Intel’s Loihi mark significant milestones.

E. 2020s and Beyond

Neuromorphic chips move from research labs to commercial testing.

Integration with AI and edge computing accelerates.

How Neuromorphic Hardware Works

Neuromorphic systems simulate how neurons and synapses operate. Here’s a breakdown of the core components and processes:

A. Neurons

These are the basic units of computation. Neuromorphic chips contain thousands to millions of artificial neurons that, like biological neurons, accumulate incoming signals and fire (emit a spike) when their internal state crosses a threshold.

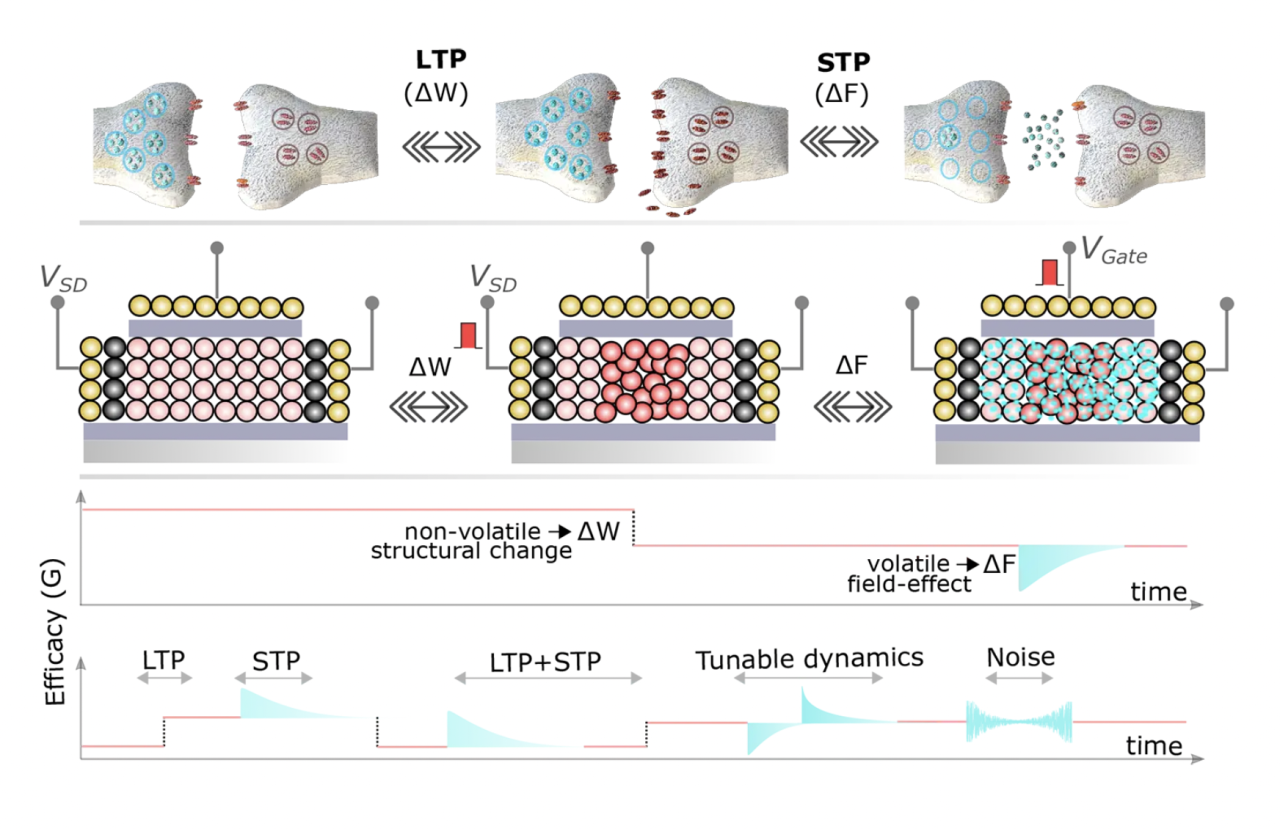
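To make the threshold idea concrete, here is a minimal sketch of a discrete-time leaky integrate-and-fire (LIF) neuron, a common simplified neuron model in neuromorphic research. The function name and the parameter values (threshold, leak, reset) are illustrative assumptions, not those of any particular chip.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire (LIF) neuron.

    Each step, the membrane potential decays by the factor `leak`, adds the
    new input, and emits a spike (1) when it reaches `threshold`, after which
    it is reset. Returns one 0/1 value per input step.
    """
    v = reset
    spikes = []
    for i in input_current:
        v = leak * v + i      # leak the old potential, then integrate new input
        if v >= threshold:    # threshold reached: the neuron fires
            spikes.append(1)
            v = reset         # reset the membrane potential after a spike
        else:
            spikes.append(0)
    return spikes


print(simulate_lif([0.3] * 10))
```

With a constant input of 0.3, the potential builds up over four steps, crosses the threshold, fires, and resets, giving `[0, 0, 0, 1, 0, 0, 0, 1, 0, 0]`. A weaker input of 0.05 settles at a potential of 0.5 and never fires at all.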

B. Synapses

Synapses connect neurons and control how strongly signals pass between them. Neuromorphic hardware emulates synaptic plasticity, the strengthening or weakening of connections based on activity, which allows the system to learn from data over time.
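A widely studied model of synaptic plasticity is spike-timing-dependent plasticity (STDP). The sketch below implements a simple pair-based STDP rule; the function name, learning rates, time constants, and weight bounds are illustrative assumptions, and real chips (Loihi, for example, supports programmable learning rules) implement their own variants.

```python
import math


def stdp_update(weight, t_pre, t_post, a_plus=0.01, a_minus=0.012,
                tau_plus=20.0, tau_minus=20.0, w_min=0.0, w_max=1.0):
    """Pair-based spike-timing-dependent plasticity (STDP) update.

    If the presynaptic spike precedes the postsynaptic spike, the synapse is
    strengthened; if it follows, the synapse is weakened. The size of the
    change decays exponentially with the time gap (times in ms).
    """
    dt = t_post - t_pre
    if dt > 0:      # pre before post (causal pairing): potentiation
        weight += a_plus * math.exp(-dt / tau_plus)
    elif dt < 0:    # post before pre (anti-causal pairing): depression
        weight -= a_minus * math.exp(dt / tau_minus)
    # Clip to the range a (hypothetical) hardware synapse can represent.
    return min(max(weight, w_min), w_max)


print(stdp_update(0.5, t_pre=10, t_post=15))
print(stdp_update(0.5, t_pre=15, t_post=10))
```

Here a presynaptic spike 5 ms before the postsynaptic one raises the weight from 0.5 to about 0.508, while the reverse order lowers it to about 0.491. Repeated over many spike pairs, this is how connections that predict a neuron's firing get reinforced.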

C. Spiking Neural Networks (SNNs)

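Unlike conventional artificial neural networks, which pass continuous-valued activations between layers for every input, SNNs communicate through discrete spikes (binary events in time). This sparse, all-or-nothing signaling makes them potentially more power-efficient and closer to how biological neurons communicate.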
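One reason binary spikes can save energy is that they turn a network's weighted sums into plain additions. The following sketch uses a hypothetical `layer_input_from_spikes` helper and a small made-up weight matrix to show that, in a given time step, each neuron's input is simply the sum of the weights from the inputs that spiked.

```python
def layer_input_from_spikes(spikes, weights):
    """Compute each neuron's input from a binary spike vector.

    weights[i][j] is the strength of the synapse from input i to neuron j.
    Because spikes are 0 or 1, no multiplications are needed: each neuron
    adds up the weights of the inputs that spiked, and silent inputs are
    skipped entirely.
    """
    n_out = len(weights[0])
    totals = [0.0] * n_out
    for i, s in enumerate(spikes):
        if s:  # only active (spiking) inputs contribute any work
            for j in range(n_out):
                totals[j] += weights[i][j]
    return totals


W = [[0.5, -0.25],
     [1.0, 1.0],
     [0.25, 0.75]]
print(layer_input_from_spikes([1, 0, 1], W))
```

With inputs 0 and 2 spiking, the two neurons receive 0.5 + 0.25 = 0.75 and -0.25 + 0.75 = 0.5. The sparser the spiking activity, the fewer additions are performed.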

D. Event-Driven Processing

Instead of running on a global clock that updates every component at every step, neuromorphic chips are event-driven: a neuron or synapse does work only when a spike arrives, which saves power and resources when activity is sparse.

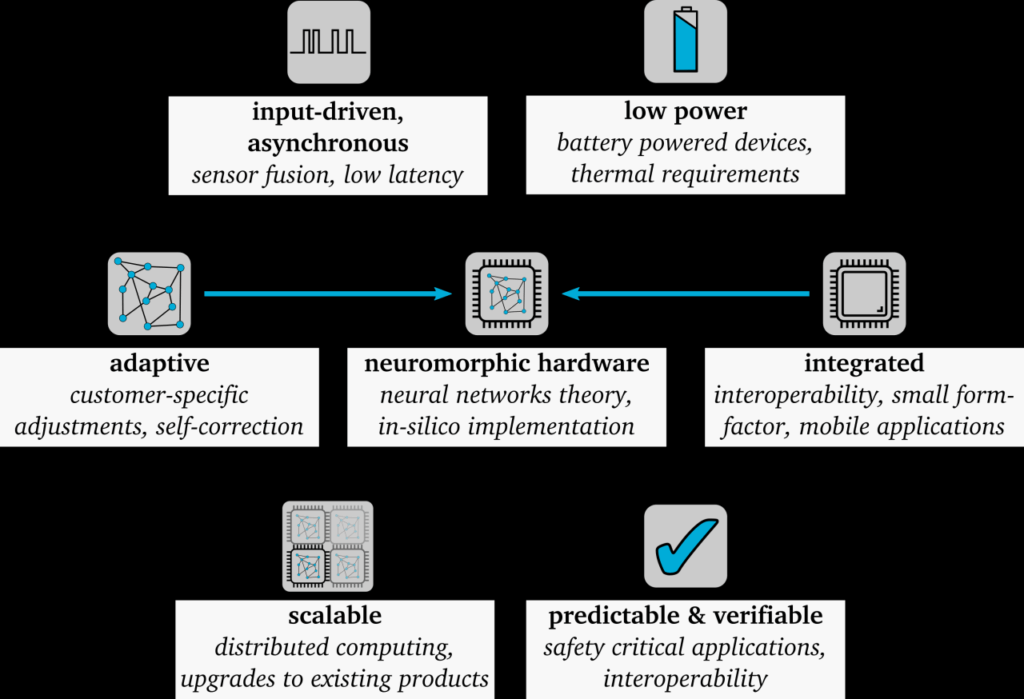
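A software analogy helps illustrate event-driven processing. The sketch below is a minimal, hypothetical event-driven simulator built on Python's `heapq` priority queue: a neuron is touched only when a spike reaches it, and the leak since its last update is applied lazily at that moment. The threshold, time constant, synaptic delay, and two-neuron network are illustrative assumptions; neuromorphic chips implement this idea in hardware rather than with a software queue.

```python
import heapq
import math


def run_event_driven(input_events, synapses, threshold=1.0, tau=10.0, delay=1.0):
    """Event-driven simulation of a small spiking network.

    input_events: list of (time, target_id, weight) external spike arrivals.
    synapses:     dict mapping neuron_id -> list of (target_id, weight).
    Returns (output_spikes, updates): the (time, neuron_id) spikes emitted and
    the number of neuron updates performed (one per spike arrival).
    """
    queue = list(input_events)
    heapq.heapify(queue)           # always process the earliest event next
    potential, last_update = {}, {}
    output_spikes = []
    updates = 0
    while queue:
        t, target, w = heapq.heappop(queue)
        updates += 1
        # Apply the exponential leak accumulated since this neuron was last
        # touched, then add the incoming spike's weight.
        elapsed = t - last_update.get(target, t)
        v = potential.get(target, 0.0) * math.exp(-elapsed / tau) + w
        last_update[target] = t
        if v >= threshold:
            output_spikes.append((t, target))
            v = 0.0                # reset after firing
            # Schedule delayed spike deliveries to downstream neurons.
            for dst, weight in synapses.get(target, []):
                heapq.heappush(queue, (t + delay, dst, weight))
        potential[target] = v
    return output_spikes, updates


inputs = [(0.0, "A", 0.6), (2.0, "A", 0.6), (50.0, "A", 0.3)]
network = {"A": [("B", 1.2)]}
print(run_event_driven(inputs, network))
```

Neuron A fires at t = 2 when its second input arrives before the first has leaked away, and B fires one delay later. Only four neuron updates occur, one per spike arrival, no matter how long the simulated interval is; a clock-driven simulator with 1 ms steps would instead update both neurons at each of roughly 50 steps.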

Key Technologies Behind Neuromorphic Computing

Several technologies support the development and efficiency of neuromorphic systems:

A. CMOS-Based Neurons

Many neuromorphic chips use CMOS transistors to simulate neuron behavior. This allows mass production using standard semiconductor techniques.

B. Memristors

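Memristors are non-volatile resistive memory elements whose conductance depends on the history of the current that has flowed through them, which makes them a natural analog of synapses. Arranged in crossbar arrays, they can store weights and compute with them in the same place.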
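The ability to store and process information in one place is easiest to see in a crossbar array, where each memristor's conductance holds a weight. The sketch below models an idealized crossbar in plain Python; the function name and example values are hypothetical, and real devices suffer from noise, limited precision, and wire resistance that this model ignores.

```python
def crossbar_currents(conductances, voltages):
    """Idealized memristor crossbar: analog matrix-vector multiplication.

    conductances[i][j] is the conductance (siemens) of the memristor joining
    row i to column j; voltages[i] is the voltage (volts) applied to row i.
    By Ohm's law each device passes I = G * V, and by Kirchhoff's current law
    the currents on each column add up, so column j carries
    sum_i G[i][j] * V[i] amperes. Non-idealities are ignored.
    """
    n_cols = len(conductances[0])
    return [
        sum(conductances[i][j] * v for i, v in enumerate(voltages))
        for j in range(n_cols)
    ]


G = [[1e-3, 2e-3],
     [0.5e-3, 1e-3]]
print(crossbar_currents(G, [0.2, 0.4]))
```

Applying 0.2 V and 0.4 V to the two rows yields column currents of about 0.4 mA and 0.8 mA. The multiply-accumulate happens physically, where the weights are stored, so no data has to be moved to a separate processor.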

C. Spintronics

Spintronic devices exploit the spin of electrons, in addition to their charge, to store and process information, potentially offering faster switching and lower power consumption.

D. 3D Integration

3D stacking of chips helps pack more neurons and synapses into a small space, mimicking the brain’s dense structure.

Leading Neuromorphic Hardware Projects

Several companies and institutions are leading the charge in neuromorphic hardware innovation:

A. IBM TrueNorth

Contains over 1 million neurons and 256 million synapses.

Extremely power-efficient, consuming roughly 70 mW while running real-time workloads.

B. Intel Loihi

Features on-chip learning capabilities.

Designed for robotic control, pattern recognition, and autonomous systems.

C. BrainScaleS (Heidelberg University)

Analog/digital hybrid system.

Emulates neural dynamics much faster than biological real time.

D. SpiNNaker (University of Manchester)

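Uses a massively parallel array of ARM processor cores (about one million in the full machine) to simulate neural networks in software.

Designed to scale toward simulating a billion neurons in biological real time.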

E. HP’s Memristor-Based Chips

Focused on integrating memory and processing for neuromorphic efficiency.

Applications of Neuromorphic Hardware

Neuromorphic systems are unlocking new capabilities across various domains:

A. Robotics

Enables real-time sensory processing and motor control.

Ideal for autonomous navigation and human-robot interaction.

B. Edge AI

Low-power neuromorphic chips are well suited to IoT devices, drones, and wearables.

Can perform real-time AI tasks without relying on the cloud.

C. Healthcare

Potential for brain-computer interfaces (BCIs).

Being explored for prosthetics and neural rehabilitation tools.

D. Smart Cities

Power-efficient surveillance, traffic management, and environmental monitoring.

E. Defense and Aerospace

Being explored for low-latency systems in reconnaissance, target detection, and autonomous vehicles.

F. Neurological Research

Helps scientists model brain diseases like Alzheimer’s and Parkinson’s.

Assists in studying neural plasticity and cognitive behavior.

Advantages of Neuromorphic Hardware

Neuromorphic systems offer numerous benefits over traditional computing models:

A. Energy Efficiency

Spiking neural networks and event-driven processing significantly reduce power usage.

B. Real-Time Processing

Ideal for latency-sensitive applications like robotics and vision systems.

C. On-Device Learning

Supports adaptive learning, even without cloud connectivity.

D. Scalable Architecture

Can simulate millions of neurons and synapses, replicating complex neural functions.

E. Improved AI Capabilities

Excels in tasks like pattern recognition, anomaly detection, and predictive analytics.

Challenges in Neuromorphic Hardware Development

Despite the promise, there are several hurdles to overcome:

A. Lack of Standardization

Different designs and protocols make interoperability difficult.

B. Programming Complexity

Developing software for neuromorphic systems requires new tools and training.

C. Hardware Limitations

Emulating brain-like function at full scale (the human brain has roughly 86 billion neurons) remains far beyond the physical limits of current chips.

D. Commercial Viability

Still in the early stages of market adoption; limited real-world deployments.

E. Compatibility Issues

Difficult to integrate with existing machine learning frameworks.

Future Trends in Neuromorphic Hardware

Neuromorphic computing is rapidly advancing, and several exciting trends are emerging:

A. AI-Neuro Fusion

Combining neuromorphic hardware with advanced AI models in pursuit of more adaptive, human-like intelligence.

B. Edge Integration

Expanding neuromorphic chips into smart home devices, autonomous cars, and wearables.

C. Neuromorphic-as-a-Service

Cloud platforms may offer neuromorphic processing as a subscription model.

D. Hybrid Architectures

Merging classical and neuromorphic computing for best-of-both-worlds performance.

E. Open-Source Ecosystems

Growing communities and platforms supporting neuromorphic programming and research.

Neuromorphic Hardware vs Traditional Computing

Here’s a side-by-side comparison of neuromorphic and conventional computing:

| Feature | Neuromorphic Hardware | Traditional Computing |
|---|---|---|
| Architecture | Brain-inspired | Von Neumann model |
| Processing Style | Parallel and event-driven | Largely sequential, clock-driven |
| Energy Efficiency | High, especially for sparse, event-driven workloads | Typically lower for such workloads |
| Learning Capability | On-chip adaptive learning | Training usually done offline or in the cloud |
| Ideal Use Cases | Real-time AI, robotics | General-purpose computing |
| Data Handling | Memory and processing co-located | Memory separate from the CPU |

Who Will Benefit from Neuromorphic Computing?

Businesses, researchers, and developers alike can gain from adopting neuromorphic technology:

A. AI Startups

Gain an edge by building lightweight, adaptive AI systems.

B. Healthtech Companies

Develop smart prosthetics and brain-machine interfaces.

C. Robotics Firms

Create faster, more responsive robots for industrial and service tasks.

D. IoT Developers

Deploy AI-powered edge devices with longer battery life.

E. Academic Institutions

Advance neuroscience and cognitive computing studies.

Conclusion: Neuromorphic Hardware is Reshaping the Future

Neuromorphic hardware represents a bold shift in how machines think, learn, and interact with the world. By mimicking the architecture and operation of the human brain, it offers a level of intelligence, adaptability, and efficiency that traditional systems struggle to achieve.

As we move into a future shaped by AI, robotics, and edge computing, neuromorphic systems are likely to become increasingly important tools. Industries that adopt this technology early may gain significant advantages in performance, innovation, and sustainability.

In short, the evolution of neuromorphic hardware is more than a technical trend: it may well lay the foundation for the next era of intelligent computing.